The Secrets of ChatGPT’s AI Training: A Look at the High-Tech Hardware Behind It

Table of contents

- Introduction to ChatGPT’s AI Training

- The Role of Hardware in AI Training

- CPU vs. GPU for AI Training

- Exploring ChatGPT’s GPUs

- Exploring ChatGPT’s memory, storage and interconnects

- Exploring ChatGPT’s memory and storage technologies

- Exploring ChatGPT’s interconnect technologies

- Techniques for Overcoming Hardware Limitations

- The Future of AI Hardware

- Conclusion: The Impact of Advanced Hardware on ChatGPT's AI Training

- Reference

Introduction to ChatGPT’s AI Training

ChatGPT is an artificial-intelligence chatbot developed by OpenAI and launched in November 2022. It is built on top of OpenAI’s GPT-3.5 family of large language models and has been fine-tuned using both supervised and reinforcement learning techniques. ChatGPT can respond to general and technical questions as well as engage in natural conversations with users.

In this blog post, we will explore how ChatGPT’s AI training works and what kind of hardware it requires. We will also compare different types of hardware for AI training and see how ChatGPT leverages them to achieve high performance and efficiency.

The Role of Hardware in AI Training

Hardware plays a crucial role in AI training, as it determines how fast and how well a model can learn from data. The hardware requirements for training and using AI algorithms can vary significantly depending on the type, size, complexity, and purpose of the model.

Training, which involves recognizing patterns in large amounts of data, typically requires more processing power and can benefit from parallelization. Once the model has been trained, the computing requirements may decrease.

Some of the main hardware components that affect AI training are:

Processor (CPU): The central processing unit executes instructions from software programs and coordinates other components. It defines the platform to support other hardware devices.

Video Card (GPU): The graphics processing unit specializes in performing parallel computations on large arrays of data. It has been the driving force behind many advancements in machine learning and AI research since the mid-2010s.

Memory (RAM): The random access memory stores data temporarily for fast access by the CPU or GPU. It affects how much data can be processed at once.

Storage (Hard Drives): The storage devices store data permanently for later retrieval by other components. They affect how quickly data can be accessed and transferred.

Interconnects: The interconnects are cables or circuits that connect different hardware devices together. They affect how fast data can be communicated between components.

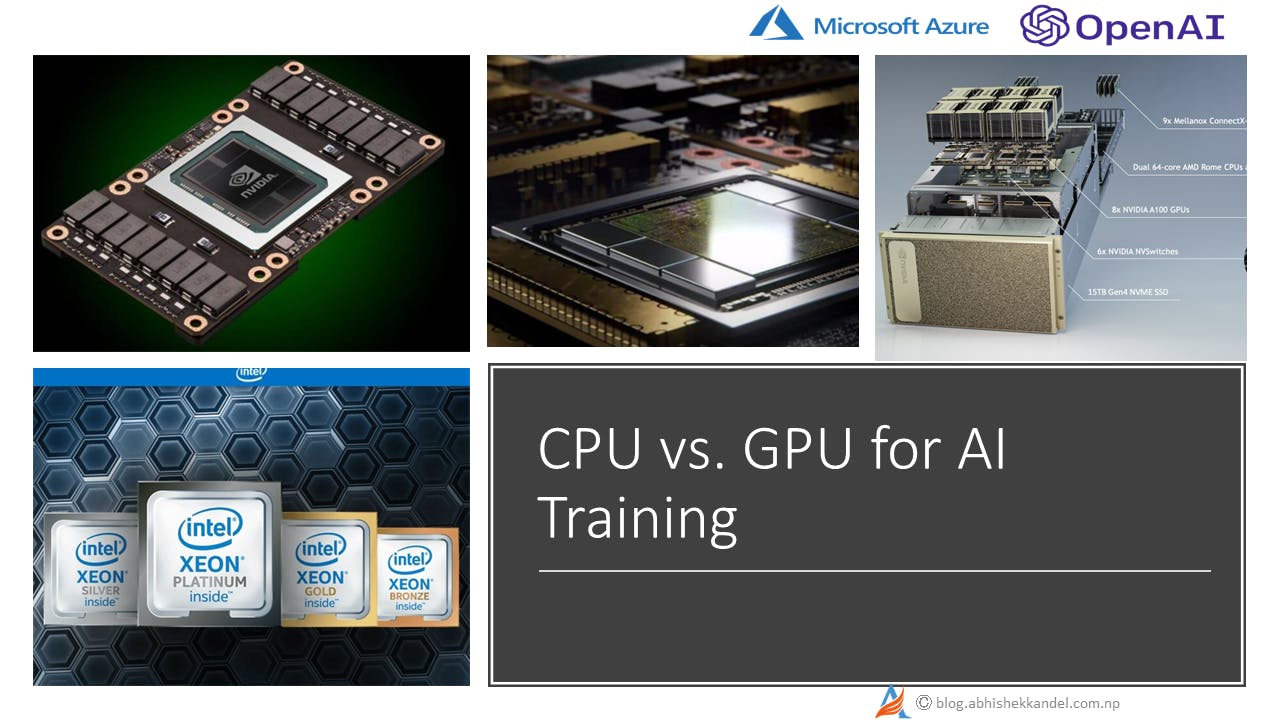

CPU vs. GPU for AI Training

One of the most common questions about hardware for AI training is whether to use a CPU or a GPU.

A CPU is a general-purpose processor designed to handle a wide variety of tasks. It has fewer but more powerful cores, each of which can execute complex instructions, either one after another or across a handful of concurrent threads.

A GPU was originally designed for graphics-intensive tasks, where the same simple operation is applied to many data elements. It has many less powerful cores that execute simple instructions in parallel.

For AI training, a GPU usually outperforms a CPU because the core operations of neural networks, such as matrix multiplication and convolution, are highly parallel and map well onto its many cores.

However, a CPU may still be useful for some tasks that require more flexibility or logic such as preprocessing data or running custom code.
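To see why matrix multiplication suits many-core hardware, note that every row of the output can be computed independently of the others. The sketch below is plain Python, not GPU code; the function names are hypothetical, and the thread pool only stands in for the many cores of a GPU.

```python
from concurrent.futures import ThreadPoolExecutor

def matmul_row(row, b):
    """Compute one output row: each entry is a dot product of `row` with a column of `b`."""
    return [sum(x * y for x, y in zip(row, col)) for col in zip(*b)]

def matmul_sequential(a, b):
    """Compute the output rows one after another, as a single core would."""
    return [matmul_row(row, b) for row in a]

def matmul_parallel(a, b, workers=4):
    """Hand each row to a worker; no row depends on any other row."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: matmul_row(row, b), a))

a = [[1, 2], [3, 4], [5, 6]]
b = [[7, 8], [9, 10]]
print(matmul_sequential(a, b))
print(matmul_parallel(a, b))
```

Both calls print the same matrix. In standard Python the threaded version will not actually run faster; the point is only that the work splits cleanly into independent pieces, which is what a GPU exploits.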

Exploring ChatGPT’s GPUs

ChatGPT relies heavily on GPUs for its AI training, as they can handle massive amounts of data and computations faster than CPUs. According to industry sources, at least 10,000 high-end NVIDIA GPUs have been brought in for ChatGPT, and it is expected to drive $3 billion to $11 billion in sales of NVIDIA-related products within 12 months.

One of the most powerful GPUs that ChatGPT uses is the NVIDIA A100 HPC (high-performance computing) accelerator. This Tensor Core GPU, priced at about $12,500, comes with 80 GB of HBM2e memory that delivers up to 2 TB/s of memory bandwidth, enough to work with very large models and datasets.

However, even with such a powerful GPU, ChatGPT still faces some challenges in scaling up its AI training. For example, on a single multi-GPU server, PyTorch can only launch ChatGPT-style training based on small models like GPT-L (774M), due to the complexity and memory fragmentation of the training process. As a result, scaling to 4 or 8 GPUs with PyTorch’s DistributedDataParallel (DDP) yields only limited performance gains.
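The idea behind data-parallel training can be sketched without any framework. The toy example below is not PyTorch and not ChatGPT's training code; it assumes a one-parameter model y = w * x with squared error, and all helper names are hypothetical. Each simulated GPU receives a shard of the batch, computes a local gradient, and the gradients are then averaged, which is the role the gradient all-reduce plays in DDP.

```python
def gradient(w, shard):
    """Mean gradient of the squared error (w*x - y)^2 with respect to w over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def split(batch, n_workers):
    """Split the batch into equal shards (assumes len(batch) is divisible by n_workers)."""
    size = len(batch) // n_workers
    return [batch[i * size:(i + 1) * size] for i in range(n_workers)]

def data_parallel_step(w, batch, n_workers, lr=0.01):
    """One training step: a local gradient per shard, then an average across workers."""
    local_grads = [gradient(w, shard) for shard in split(batch, n_workers)]
    avg_grad = sum(local_grads) / n_workers   # stands in for the all-reduce
    return w - lr * avg_grad

batch = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # samples of y = 2x
w = 0.0
for _ in range(200):
    w = data_parallel_step(w, batch, n_workers=2)
print(round(w, 3))
```

With equal shard sizes, the averaged gradient matches the gradient of the full batch, so the result is the same as single-device training. What the sketch leaves out is the cost of that averaging step, which on real hardware travels over the interconnect and is one reason scaling gains can be limited.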

Exploring ChatGPT’s memory, storage and interconnects

Besides GPUs, ChatGPT also uses other hardware components to enhance its AI training performance. These include memory, storage and interconnects.

Memory is used to store data and instructions that are needed for processing by the CPU or GPU. Storage is used to save data and models that are not currently in use by the CPU or GPU. Interconnects are used to transfer data and instructions between different hardware components.

ChatGPT requires a lot of memory and storage capacity to handle its large models and datasets. For example, GPT-3 has 175 billion parameters, and its training data was drawn from roughly 45 terabytes of text. A model of this size exceeds the memory capacity of even the largest GPUs used to train it, so training has to be spread across many processors running in parallel.
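A quick back-of-the-envelope calculation shows why a model of this size cannot sit on one GPU. The parameter count and the 80 GB figure come from this post; the bytes-per-parameter values (2 for 16-bit and 4 for 32-bit numbers) are our assumptions about numeric precision. The estimate covers the weights only and ignores gradients, optimizer state, and activations, so real requirements are higher.

```python
import math

PARAMS = 175e9          # parameter count quoted above
GPU_MEMORY_GB = 80      # memory of one A100, as quoted earlier in this post

def weights_gb(params, bytes_per_param):
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9

def min_gpus(params, bytes_per_param, gpu_memory_gb=GPU_MEMORY_GB):
    """Smallest number of GPUs whose combined memory can hold the weights."""
    return math.ceil(weights_gb(params, bytes_per_param) / gpu_memory_gb)

# Assumed precisions: 2 bytes (16-bit) and 4 bytes (32-bit) per parameter.
for name, nbytes in [("16-bit", 2), ("32-bit", 4)]:
    print(name, weights_gb(PARAMS, nbytes), "GB ->", min_gpus(PARAMS, nbytes), "GPUs")
```

Under these assumptions the weights alone take 350 GB at 16-bit precision, or at least five 80 GB GPUs before a single training step has been run.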

Exploring ChatGPT’s memory and storage technologies

ChatGPT uses a combination of different types of memory and storage technologies to optimize its AI training performance. These include:

High bandwidth memory (HBM): This is a type of stacked memory that sits in the same package as the GPU chip, providing faster data transfer and lower power consumption than traditional memory modules. ChatGPT uses HBM to store its large models and parameters that need to be accessed quickly by the GPU. According to Samsung and SK Hynix, demand driven by ChatGPT has significantly increased their HBM orders.

Solid state drive (SSD): This is a type of storage device that uses flash memory chips instead of spinning disks, offering faster read and write speeds and lower latency than hard disk drives (HDDs). ChatGPT uses SSDs to store its large datasets that need to be loaded quickly by the CPU or GPU. Because SSDs have no moving parts, they also tend to be less prone to mechanical failure than HDDs.

Cloud storage: This is a type of storage service that allows users to store data on remote servers over the internet, offering scalability, accessibility and security benefits. ChatGPT uses cloud storage to back up its data and models that are not currently in use by the CPU or GPU. Cloud storage also allows ChatGPT to share its data and models with other users and applications easily.

Exploring ChatGPT’s interconnect technologies

Interconnects are used to transfer data and instructions between different hardware components, such as CPUs, GPUs, memory and storage devices. Interconnects can affect the speed and efficiency of data processing and communication.

ChatGPT uses a combination of different types of interconnect technologies to optimize its AI training performance. These include:

PCI Express (PCIe): This is a type of interconnect that connects peripheral devices, such as GPUs and SSDs, to the motherboard of a computer system. PCIe offers high bandwidth and low latency for data transfer. ChatGPT uses PCIe to connect its GPUs and SSDs to its CPUs.

NVLink: This is a type of interconnect that connects multiple GPUs together, allowing them to share memory and work as a single unit. NVLink offers higher bandwidth and lower latency than PCIe for GPU-to-GPU communication. ChatGPT uses NVLink to connect its GPUs together for parallel processing.

Ethernet: This is a type of interconnect that connects multiple computer systems together over a network, such as the internet. Ethernet offers scalability and reliability for data communication. ChatGPT uses Ethernet to connect its computer systems together for distributed processing.
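To get a feel for how much the choice of interconnect matters, the sketch below estimates how long it takes to move one GPU's worth of data (80 GB) over each link. The bandwidth numbers are rough, assumed values chosen only to show orders of magnitude; they are not taken from this post's sources, and real figures depend heavily on the hardware generation and configuration.

```python
# Assumed, illustrative bandwidths in gigabytes per second (not measured values).
ASSUMED_BANDWIDTH_GB_PER_S = {
    "Ethernet": 12.5,
    "PCIe": 32.0,
    "NVLink": 600.0,
}

def transfer_seconds(size_gb, bandwidth_gb_per_s):
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return size_gb / bandwidth_gb_per_s

payload_gb = 80  # one A100's worth of memory, as quoted earlier in this post
for name, bandwidth in ASSUMED_BANDWIDTH_GB_PER_S.items():
    print(f"{name}: {transfer_seconds(payload_gb, bandwidth):.2f} s")
```

Even with idealized numbers, the gap between the slowest and fastest link is large, which is why GPU-to-GPU traffic is kept on the fastest interconnect available.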

Techniques for Overcoming Hardware Limitations

While advanced hardware can greatly improve the performance and efficiency of AI training, it also comes with some challenges and limitations that need to be addressed. Some of these challenges include:

Privacy and security: AI training often requires access to large amounts of sensitive data, such as personal information, medical records, or financial transactions. This poses a risk of data breaches or misuse by malicious actors. To protect the data and the results of AI training, some techniques that can be used are:

Cryptographic algorithms: These are methods that encrypt the data or the computations using secret keys, so that only authorized parties can access them.

Data masking: This is a technique that replaces sensitive data with fake or anonymized data that preserves its statistical properties.

Synthetic data: This is a technique that generates artificial data that mimics the characteristics and distribution of real data.

Differential privacy: This is a technique that adds random noise to the data or the results of AI training, so that individual records cannot be identified or inferred.
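As a small illustration of the last technique, the sketch below answers a counting query with added Laplace noise, a common way to achieve differential privacy. It is a minimal example under our own assumptions: the `ages` data is made up, the function names are hypothetical, and `epsilon` is the privacy parameter (smaller means more noise and more privacy).

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential samples."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Count matching records, then add Laplace noise with scale 1/epsilon.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), which is why the noise scale is 1/epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(0)                    # fixed seed so the sketch is repeatable
ages = [23, 35, 41, 52, 67, 29, 38]       # made-up example data
noisy = private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng)
print(noisy)  # the true count (3) plus random noise
```

Any single answer may be off by a few units, but averaged over many queries the noise cancels out, so aggregate statistics stay useful while individual records are obscured.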

Scalability and complexity: AI training often involves complex models with millions or billions of parameters, which require large amounts of computational resources and time to train. To scale up AI training and reduce its complexity, some techniques that can be used are:

Distributed computing: This is a technique that divides the AI training task into smaller subtasks and distributes them across multiple devices or servers.

Parallel computing: This is a technique that performs multiple computations simultaneously using multiple cores or processors.

Model compression: This is a technique that reduces the size or complexity of an AI model without compromising its accuracy or functionality. Some examples of model compression are pruning (removing unnecessary parameters), quantization (reducing the precision of parameters), and distillation (transferring knowledge from a larger model to a smaller one).
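Of the compression methods just mentioned, quantization is the easiest to show in a few lines. The sketch below stores each weight as an 8-bit signed integer plus one shared scale factor. It is a simplified illustration with made-up weights and hypothetical function names, not the scheme used by any particular library.

```python
def quantize(weights, bits=8):
    """Map floats to signed integers using a single shared scale factor.

    Assumes at least one weight is non-zero, otherwise the scale would be 0.
    """
    qmax = 2 ** (bits - 1) - 1                      # 127 for 8 bits
    scale = max(abs(w) for w in weights) / qmax
    return [round(w / scale) for w in weights], scale

def dequantize(qweights, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in qweights]

weights = [0.82, -1.27, 0.05, 0.4, -0.66]           # made-up example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q)
print(max_error)
```

Each weight now needs one byte instead of four (for 32-bit floats), at the price of a rounding error of at most half the scale factor per weight.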

Memory bottleneck: AI training often requires loading large amounts of data into memory for processing, which can exceed the memory capacity or bandwidth of hardware devices. This can result in slow performance or memory errors. To overcome this memory bottleneck, some techniques that can be used are:

Data streaming: This is a technique that processes data in small batches as they arrive instead of loading them all at once into memory.

Data prefetching: This is a technique that anticipates which data will be needed next and loads them into memory in advance.

Memory optimization: This is a technique that reduces the memory footprint of an AI model by using efficient data structures, algorithms, or libraries.
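Data streaming and prefetching combine naturally. The sketch below, using only Python's standard library, reads a dataset in small batches and loads the next batches on a background thread while the current one is being processed. The function names and the tiny `records` range are placeholders for a real data source.

```python
import queue
import threading

def stream_batches(source, batch_size):
    """Yield fixed-size batches from any iterable without loading it all into memory."""
    batch = []
    for item in source:
        batch.append(item)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:                      # final, possibly smaller batch
        yield batch

def prefetch(batches, depth=2):
    """Load up to `depth` batches ahead of the consumer on a background thread."""
    buffer = queue.Queue(maxsize=depth)
    done = object()                # sentinel marking the end of the stream

    def producer():
        for b in batches:
            buffer.put(b)          # blocks while the buffer is full
        buffer.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        b = buffer.get()
        if b is done:
            return
        yield b

records = range(10)                # stands in for a dataset too large for memory
totals = [sum(batch) for batch in prefetch(stream_batches(records, 4))]
print(totals)
```

At most `depth` batches wait in the buffer at any time, so memory use stays bounded no matter how large the underlying dataset is.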

The Future of AI Hardware

As AI becomes more ubiquitous and powerful in various domains and applications, there is an increasing demand for more advanced hardware solutions to support its development and deployment. Some trends and directions for future AI hardware include:

Specialized chips: These are chips designed specifically for certain types of AI tasks, such as image recognition, natural language processing, or reinforcement learning. They can offer higher performance, lower power consumption, and lower cost than general-purpose chips. Some examples of specialized chips for AI are:

ASICs: Application-specific integrated circuits that are designed to do one specific type of calculation very quickly. They can be used for things like Bitcoin mining, video encoding, or running specific artificial intelligence tasks.

GPUs: Graphics processing units that were originally designed for rendering graphics but can also perform parallel computations on large arrays of data. They are widely used for deep learning and other AI applications that require high computational power.

FPGAs: Field-programmable gate arrays are reconfigurable circuits that can be customized for different types of computations. They can offer more flexibility and adaptability than ASICs or GPUs but also require more programming skills and resources.

Edge computing: This is a paradigm that moves some of the AI processing from centralized servers or clouds to local devices or nodes near the data sources. This can reduce latency, bandwidth consumption, and privacy risks associated with sending data over networks. Some examples of edge devices that can run AI algorithms are:

Smartphones: These are handheld devices that have built-in cameras, sensors, and processors that can enable various AI applications such as face recognition, speech recognition, or augmented reality.

Smart cameras: These are cameras that have embedded processors and memory that can perform image analysis and object detection without relying on external servers or clouds.

IoT devices: These are devices that are connected to the internet and can collect and transmit data from their environments. They can also run AI algorithms locally to perform tasks such as anomaly detection, predictive maintenance, or smart home automation.

Neuromorphic computing: This is a branch of computing that mimics the structure and function of biological neural networks in the brain. It aims to create hardware devices that can learn from data and adapt to changing conditions without explicit programming. Some potential benefits of neuromorphic computing are:

Energy efficiency: Neuromorphic chips can consume much less power than conventional chips by using spiking neurons and synapses that only activate when needed.

Learning ability: Neuromorphic chips can learn from their inputs and outputs without requiring labeled data or predefined models. They can also adjust their parameters dynamically based on feedback signals.

Fault tolerance: Neuromorphic chips can tolerate noise and errors by using redundancy and diversity in their components.

Specialized chips, edge computing, and neuromorphic computing are some of the main directions for AI hardware. The impact of advanced hardware on AI is significant, as it can enable faster, cheaper, and more scalable AI solutions for various domains and challenges. Some of the trends and benefits of hardware for AI are:

Accelerating application development: Hardware for AI can orchestrate and coordinate computations among accelerators, serving as a differentiator in AI. It can also reduce the time and cost of training and deploying AI models by providing specialized platforms and tools.

Enabling pervasive computing: Hardware for AI can support the increasingly multi-faceted functionality of AI systems, such as storage, memory, logic, and networking. It can also enable edge computing by allowing devices to run AI algorithms locally without relying on external servers or clouds.

Becoming an omnipresent feature of everyday life: Hardware for AI can empower various applications that affect our daily lives, such as smartphones, voice-activated assistants, smart cameras, IoT devices, robotics, healthcare, security, education, and entertainment.

Conclusion: The Impact of Advanced Hardware on ChatGPT's AI Training

The impact of advanced hardware on ChatGPT’s AI training is significant, as it can enable faster, cheaper, and more scalable training of large language models like GPT-3.5 that form the basis of ChatGPT. Some of the benefits of advanced hardware for ChatGPT’s AI training are:

Accelerating training time: Advanced hardware can reduce the time required to train large language models by providing specialized chips that can perform parallel computations on large arrays of data. For example, GPUs can offer higher performance than CPUs for deep learning tasks.

Reducing training cost: Advanced hardware can reduce the cost associated with training large language models by providing specialized platforms and tools that can optimize resource utilization and efficiency. For example, ASICs can offer lower power consumption and lower cost than general-purpose chips.

Enabling larger-scale training: Advanced hardware can enable larger-scale training of large language models by providing more memory, storage, logic, and networking capabilities. For example, high bandwidth memory gives each GPU fast access to large models, and interconnects such as NVLink let multiple GPUs share memory and work as a single unit.

Reference

ChatGPT - Wikipedia. [online]. Available at: https://en.wikipedia.org/wiki/ChatGPT

ChatGPT. [online]. Available at: https://openai.com/blog/chatgpt

ChatGPT Online. [online]. Available at: https://chatgpt.en.softonic.com/web-apps?ex=DINS-635.2

AI Hardware | IBM Research. [online]. Available at: https://research.ibm.com/topics/ai-hardware

Hardware Recommendations for Machine Learning / AI | Puget Systems. [online]. Available at: https://www.pugetsystems.com/solutions/scientific-computing-workstations/machine-learning-ai/hardware-recommendations/

What is AI hardware? Approaches, advantages and examples. [online]. Available at: https://the-decoder.com/what-is-ai-hardware-approaches-advantages-and-examples/

Open source solution replicates ChatGPT training process! Ready to go with only 1.6GB GPU memory and gives you 7.73 times faster training! | DeepAI. [online]. Available at: https://deepai.org/guide/open-source-chatgpt

Meet the Nvidia GPU that makes ChatGPT come alive | TechRadar. [online]. Available at: https://www.techradar.com/best/heres-the-dollar13k-nvidia-gpu-that-makes-chatgpt-come-alive

ChatGPT: Will compute power become bottleneck to AI growth? [online]. Available at: https://techmonitor.ai/technology/ai-and-automation/chatgpt-ai-compute-power

ChatGPT's Demand for High-Performance Memory Chips on the Rise - DRex Electronics. [online]. Available at: https://www.icdrex.com/chatgpts-demand-for-high-performance-memory-chips-on-the-rise/

Infrastructure to support Open AI's ChatGPT could be costly | TechTarget. [online]. Available at: https://www.techtarget.com/searchenterpriseai/news/365531813/Infrastructure-to-support-Open-AIs-ChatGPT-could-be-costly

Wikipedia's Jimmy Wales talks ChatGPT; Key technologies used in the war in Ukraine | LinkedIn. [online]. Available at: https://www.linkedin.com/pulse/wikipedias-jimmy-wales-talks-chatgpt-key-technologies-used/

ChatGPT is a new AI chatbot that can answer questions and write essays. [online]. Available at: https://www.cnbc.com/2022/12/13/chatgpt-is-a-new-ai-chatbot-that-can-answer-questions-and-write-essays.html

4 Ways to Overcome Deep Learning Challenges in 2023. [online]. Available at: https://research.aimultiple.com/deep-learning-challenges/

How to overcome the limitations of AI | TechTarget. [online]. Available at: https://www.techtarget.com/searchcio/feature/How-to-overcome-the-limitations-of-AI

Top 5 AI Implementation Challenges and How to Overcome Them — ITRex. [online]. Available at: https://itrexgroup.com/blog/artificial-intelligence-challenges/

AI Chips: What They Are and Why They Matter - Center for Security and Emerging Technology. [online]. Available at: https://cset.georgetown.edu/publication/ai-chips-what-they-are-and-why-they-matter/

AI chips: What they are, how they work, and which ones to choose? - Edged 🟩 Making machines intelligent since 2017. [online]. Available at: https://edged.ai/blog/ai-chips/

Available at: https://www.reuters.com/technology/nvidia-results-show-its-growing-lead-ai-chip-race-2023-02-23/

AI hardware - What they are and why they matter in 2022. [online]. Available at: https://roboticsbiz.com/ai-hardware-what-they-are-and-why-they-matter-in-2020/

Hardware for Artificial Intelligence | Frontiers Research Topic. [online]. Available at: https://www.frontiersin.org/research-topics/15184/hardware-for-artificial-intelligence

The 7 Biggest Artificial Intelligence (AI) Trends In 2022. [online]. Available at: https://www.forbes.com/sites/bernardmarr/2021/09/24/the-7-biggest-artificial-intelligence-ai-trends-in-2022/?sh=277689f92015